The Decorator Pattern is a design pattern used to dynamically add new functionality to an object at runtime without altering its structure. This ability to modify an object's behaviour dynamically is its advantage over class inheritance. When there is no need to alter an object's functionality dynamically, the decorator pattern is most likely over-engineering.

It is most appropriate in two situations: when you find yourself creating dozens of classes that are extremely similar but vary slightly in functionality (known as class explosion), or when there is a high likelihood that you will need to extend existing functionality in the future while maintaining its original capabilities.
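To make class explosion concrete, here is a minimal sketch of what happens when every variation becomes its own subclass. All class names and prices are hypothetical and chosen only for illustration.

```typescript
// All class names and prices below are hypothetical, for illustration only.
abstract class Drink {
  abstract getPrice(): number;
}

class Martini extends Drink {
  getPrice(): number {
    return 8;
  }
}

// One subclass per optional extra...
class MartiniWithOlive extends Martini {
  getPrice(): number {
    return super.getPrice() + 0.5;
  }
}

class MartiniWithTwist extends Martini {
  getPrice(): number {
    return super.getPrice() + 0.25;
  }
}

// ...and one more for every combination of extras.
class MartiniWithOliveAndTwist extends MartiniWithOlive {
  getPrice(): number {
    return super.getPrice() + 0.25;
  }
}

// With n independent optional extras, inheritance alone needs up to 2^n
// classes per base drink (counting the base drink itself).
function classesNeeded(optionalExtras: number): number {
  return Math.pow(2, optionalExtras);
}

console.log(new MartiniWithOliveAndTwist().getPrice()); // 8.75
console.log(classesNeeded(2)); // 4
console.log(classesNeeded(5)); // 32
```

With wrappers, the same menu would need roughly one class per extra rather than one per combination.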

You can think of decorators as wrappers for a concrete class: they can add functionality or modify existing behaviour.

In the above illustration, you can start to visualise how the decorator works: it wraps a concrete class and either modifies or adds functionality. In theory, you could have an unlimited number of wrappers. Below is a UML representation of how this works.

Notice that the IDecorator interface not only implements or extends the base interface or concrete class, but also holds a reference to that base definition or implementation. This creates both a "has a" and an "is a" relationship with the base concrete class. Most commonly, this relationship allows us to dynamically add functionality and behaviour to existing classes at runtime; the most common example of this is beverage pricing and naming.
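The "is a" plus "has a" relationship can be sketched in a few lines. This is a generic skeleton under assumed names (Component, Greeter, Decorator, Shout, and Exclaim are all hypothetical), not the cocktail code that follows.

```typescript
// All names here are hypothetical and exist only to show the shape of the pattern.
interface Component {
  describe(): string;
}

// The concrete class being wrapped.
class Greeter implements Component {
  describe(): string {
    return "hello";
  }
}

// "Is a" Component (implements the interface) and
// "has a" Component (the wrapped field).
abstract class Decorator implements Component {
  protected wrapped: Component;

  constructor(wrapped: Component) {
    this.wrapped = wrapped;
  }

  // Default behaviour: delegate to whatever is wrapped.
  describe(): string {
    return this.wrapped.describe();
  }
}

// Modifies existing behaviour.
class Shout extends Decorator {
  describe(): string {
    return super.describe().toUpperCase();
  }
}

// Adds to existing behaviour.
class Exclaim extends Decorator {
  describe(): string {
    return `${super.describe()}!`;
  }
}

// Wrappers are chosen and stacked at runtime; the Greeter class is never edited.
const plain: Component = new Greeter();
const loud: Component = new Exclaim(new Shout(plain));

console.log(plain.describe()); // hello
console.log(loud.describe()); // HELLO!
```

Because every decorator is itself a Component, callers cannot tell a wrapped object from an unwrapped one, which is what allows the stacking.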

The most common example used to teach the decorator pattern is a rather contrived coffeehouse example. It lends itself very well to illustrating how the pattern works; however, it's not really how one would leverage this pattern in production code. Instead of the coffee example, I'm going to create a cocktail one. I realise that it sounds exactly the same, and to a degree it is. The reason I'm going with a cocktail example is that, once we understand the fundamentals of how the decorator pattern works, it acts as an excellent jumping-off point to emphasise a real-world problem which this pattern is meant to solve.

Let's start with an ingredient interface and class. Each beverage will be made up of a combination of ingredients, which will determine the price of the finished drink.

interface IIngredient {

name: string;

pricePerUnit: number;

unit: number

}

class Ingredient implements IIngredient {

name: string;

pricePerUnit: number;

unit: number

constructor(name: string, pricePerUnit: number, unit: number) {

this.name = name;

this.pricePerUnit = pricePerUnit;

this.unit = unit;

}

}

At the very base level, whether we are talking wine, cocktails, mocktails, coffee, or Italian sodas, every beverage is going to have a price and a recipe. At this point it would make sense to include an array of Ingredients in our Beverage class; however, that would completely defeat the need for the decorator pattern. For this reason, though this example articulates the use of the decorator pattern rather well, it's not a practical one.

abstract class Beverage {

abstract getPrice(): number;

abstract getRecipe(): string;

}

Notice that anything that inherits from the abstract Beverage class has to implement both the getPrice and getRecipe methods. Next, let's create a Cocktail class which extends the abstract Beverage class and implements those two abstract methods.

export default class Cocktail extends Beverage {

name: string;

constructor(name: string) {

super();

this.name = name;

}

getPrice(): number {

return 0;

}

getRecipe(): string {

return `A ${this.name} made with`;

}

}

With that complete, we have a UML diagram like so:

Notice that our Ingredient interface and class are not really attached to anything. Let's now introduce the idea of a decorator and fix that.

abstract class CocktailDecorator extends Beverage {

base: Beverage;

ingredient: IIngredient;

constructor(base: Beverage, name: string, price: number, oz: number) {

// A derived class must call super() before touching `this`.

super();

this.base = base;

this.ingredient = new Ingredient(name, price, oz);

}

getPrice = (): number => {

// Use the field names declared on IIngredient.

const price = this.ingredient.pricePerUnit;

const quantity = this.ingredient.unit;

const thisPrice = price * quantity;

const basePrice = this.base.getPrice();

return basePrice + thisPrice;

}

getRecipe = (): string => {

const prefix = this.base.getRecipe();

const conjunction = this.base instanceof Cocktail ? ":" : ",";

const quantity = this.ingredient.unit;

const parts = `${quantity <= 1 ? 'part' : 'parts'}`;

const name = this.ingredient.name;

return `${prefix}${conjunction} ${quantity} ${parts} ${name}`;

};

}

Here we have this interesting "is a" and "has a" relationship. It lets us wrap anything that inherits from the abstract Beverage class, and it also lets us modify the behaviour of anything defined in the Beverage class. Notice that our decorator uses the ingredient to calculate the price as well as the recipe.

Let's create three variations of our decorator:

export class VodkaDecorator extends CocktailDecorator {

constructor(base: Beverage, oz: number) {

super(base, "Vodka", 4, oz);

}

}

export class GinDecorator extends CocktailDecorator {

constructor(base: Beverage, oz: number) {

super(base, "Gin", 5, oz);

}

}

export class VermouthDecorator extends CocktailDecorator {

constructor(base: Beverage, oz: number) {

super(base, "Vermouth", 1, oz);

}

}

Now we can instantiate either a gin or a vodka martini like so:

const vodkaMartini = new VermouthDecorator(new VodkaDecorator(new Cocktail("Vodka martini"), 2), .25);

const ginMartini = new VermouthDecorator(new GinDecorator(new Cocktail("Gin martini"), 2), .25);

This demonstrates how we can use the decorator pattern to append functionality to an existing base implementation. Notice that in this case we create a daisy chain of classes that each extend and hold an instance of the Beverage class, down to the base concrete implementation, which only extends the abstract Beverage class.

One thing to keep in mind is that nesting order matters. Consider the following calls:

console.log(vodkaMartini.getRecipe());

console.log(vodkaMartini.getPrice());

console.log(ginMartini.getRecipe());

console.log(ginMartini.getPrice());

Each getRecipe and getPrice call travels from the outer wrapper down to the inner one. This is why the getRecipe method in the base CocktailDecorator builds on a prefix: the concrete class's getRecipe output ends up at the front of the result.

In general, when using the decorator pattern, any functionality that has been altered by the decorators is called from the outer ring down to the centre.
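To see the effect of nesting order, here is a condensed, self-contained sketch of the example so far. IngredientDecorator is a hypothetical stand-in that inlines the ingredient fields instead of using the separate Ingredient class; the prices and quantities are the ones used above.

```typescript
abstract class Beverage {
  abstract getPrice(): number;
  abstract getRecipe(): string;
}

class Cocktail extends Beverage {
  name: string;

  constructor(name: string) {
    super();
    this.name = name;
  }

  getPrice(): number {
    return 0;
  }

  getRecipe(): string {
    return `A ${this.name} made with`;
  }
}

// Hypothetical, simplified decorator: one class parameterised by ingredient.
class IngredientDecorator extends Beverage {
  base: Beverage;
  name: string;
  pricePerOz: number;
  oz: number;

  constructor(base: Beverage, name: string, pricePerOz: number, oz: number) {
    super();
    this.base = base;
    this.name = name;
    this.pricePerOz = pricePerOz;
    this.oz = oz;
  }

  // The outer wrapper asks the inner one first, then adds its own share.
  getPrice(): number {
    return this.base.getPrice() + this.pricePerOz * this.oz;
  }

  getRecipe(): string {
    const conjunction = this.base instanceof Cocktail ? ":" : ",";
    const parts = this.oz <= 1 ? "part" : "parts";
    return `${this.base.getRecipe()}${conjunction} ${this.oz} ${parts} ${this.name}`;
  }
}

// Same ingredients, opposite nesting order.
const vodkaInside = new IngredientDecorator(
  new IngredientDecorator(new Cocktail("Vodka martini"), "Vodka", 4, 2),
  "Vermouth", 1, 0.25);
const vermouthInside = new IngredientDecorator(
  new IngredientDecorator(new Cocktail("Vodka martini"), "Vermouth", 1, 0.25),
  "Vodka", 4, 2);

console.log(vodkaInside.getRecipe());
// A Vodka martini made with: 2 parts Vodka, 0.25 part Vermouth
console.log(vermouthInside.getRecipe());
// A Vodka martini made with: 0.25 part Vermouth, 2 parts Vodka
console.log(vodkaInside.getPrice(), vermouthInside.getPrice()); // 8.25 8.25
```

The price is a sum, so it is the same either way, while the recipe string follows the order in which the wrappers were applied.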

Our final UML looks something like this:

As mentioned before, this is a contrived example, and though it illustrates how the decorator pattern works, it does not provide a realistic example of the context in which to actually use it. For a problem like this, it would be far more reasonable for the cocktail class to be instantiated with an array of ingredients, with an option to add or remove ingredients. So let's refactor our code to a more appropriate version.

Notice how we've simplified our models:

interface IIngredient {

name: string;

ozPrice: number;

ozQuantity: number;

}

abstract class Priced {

abstract getPrice(): number;

abstract getDescription(): string;

}

export class Ingredient extends Priced implements IIngredient {

name: string;

ozPrice: number;

ozQuantity: number;

constructor(name: string, ozPrice: number, ozQuantity: number) {

super();

this.name = name;

this.ozPrice = ozPrice;

this.ozQuantity = ozQuantity;

}

getPrice(): number {

return this.ozPrice * this.ozQuantity;

}

getDescription(): string {

const quantity = this.ozQuantity;

const parts = `${quantity <= 1 ? 'part' : 'parts'}`;

const name = this.name;

return `${quantity} ${parts} ${name}`;

}

}

export default class Cocktail extends Priced {

name: string;

ingredients: Ingredient[]

constructor(name: string, ingredients:Ingredient[]) {

super();

this.name = name;

this.ingredients = ingredients;

}

getPrice(): number {

return this.ingredients.reduce((sum, item)=> sum + item.getPrice(), 0);

}

getDescription(): string {

return this.name + ': ' + this.ingredients.map(item => item.getDescription()).join(', ');

}

addIngredient (ingredient: Ingredient) {

const i = this.ingredients.find(i => i.name === ingredient.name);

if(i)

i.ozQuantity += ingredient.ozQuantity;

else

this.ingredients.push(ingredient);

}

removeIngredient(ingredient: Ingredient){

const i = this.ingredients.findIndex(i => i.name === ingredient.name);

if(i > -1) {

const t = this.ingredients[i];

t.ozQuantity -= ingredient.ozQuantity;

if(t.ozQuantity <= 0)

this.ingredients.splice(i,1);

}

}

}

We've significantly reduced the complexity of our code. Now let's create a menu object. That makes sense: it's reasonable for a predefined menu of cocktails to exist for patrons to choose from, with the ability to modify them, swap out the alcohol for a preferred brand, or add a double shot.

class Menu{

cocktails: Cocktail[]

constructor(cocktails: Cocktail[]){

this.cocktails = cocktails;

}

listCocktails(): [string, number][]{

return this.cocktails.map(c=> [c.getDescription(), c.getPrice()])

}

}
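As a rough usage sketch, the menu can be built and printed as follows. The classes are condensed, hypothetical stand-ins for the ones above (no Priced base class, and a simplified description format); the prices per ounce and quantities are reused from the earlier decorator example.

```typescript
// Condensed stand-ins for the article's classes; details are simplified.
class Ingredient {
  name: string;
  ozPrice: number;
  ozQuantity: number;

  constructor(name: string, ozPrice: number, ozQuantity: number) {
    this.name = name;
    this.ozPrice = ozPrice;
    this.ozQuantity = ozQuantity;
  }

  getPrice(): number {
    return this.ozPrice * this.ozQuantity;
  }
}

class Cocktail {
  name: string;
  ingredients: Ingredient[];

  constructor(name: string, ingredients: Ingredient[]) {
    this.name = name;
    this.ingredients = ingredients;
  }

  getPrice(): number {
    return this.ingredients.reduce((sum, item) => sum + item.getPrice(), 0);
  }

  // Simplified format (assumption): "<name>: <oz> oz <ingredient>, ..."
  getDescription(): string {
    const parts = this.ingredients.map(i => `${i.ozQuantity} oz ${i.name}`);
    return `${this.name}: ${parts.join(", ")}`;
  }
}

class Menu {
  cocktails: Cocktail[];

  constructor(cocktails: Cocktail[]) {
    this.cocktails = cocktails;
  }

  // Each entry is a [description, price] tuple.
  listCocktails(): [string, number][] {
    return this.cocktails.map((c): [string, number] => [c.getDescription(), c.getPrice()]);
  }
}

const menu = new Menu([
  new Cocktail("Gin martini", [new Ingredient("Gin", 5, 2), new Ingredient("Vermouth", 1, 0.25)]),
  new Cocktail("Vodka martini", [new Ingredient("Vodka", 4, 2), new Ingredient("Vermouth", 1, 0.25)]),
]);

for (const [description, price] of menu.listCocktails()) {
  console.log(`${description} ... $${price.toFixed(2)}`);
}
// Gin martini: 2 oz Gin, 0.25 oz Vermouth ... $10.25
// Vodka martini: 2 oz Vodka, 0.25 oz Vermouth ... $8.25
```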

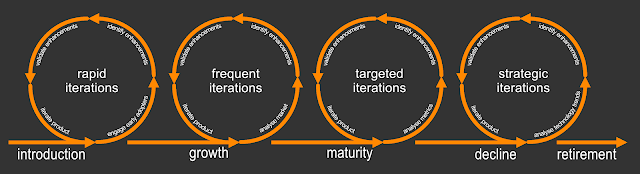

Let's say we go live with our solution and, as far as we know, it works: we can define cocktails and their ingredients, and generate a menu. Now, hypothetically, let's say someone orders a dirty martini with 10 extra shots of vodka. That's fine and dandy, but what about glasses? Don't they have a capacity? Our example is rather trivial, and I would say forget the open/closed principle and just refactor the code; in this context no one would disagree with me. However, in financial software that has thousands of lines of legacy code, no one would let you make that change for fear of introducing more bugs.

For an intricate situation like that, it makes sense to introduce a decorator. This way we can ensure that the original code continues to function while extending our system without risking legacy code.

export class GlassDecorator extends Cocktail{

base: Cocktail;

maxVolume: number;

constructor(base: Cocktail, maxVolume: number) {

super(base.name, base.ingredients);

this.base = base;

this.maxVolume = maxVolume

}

getFilledVolume(): number{

return this.base.ingredients.reduce((sum, i)=> sum + i.ozQuantity, 0);

}

override addIngredient(ingredient: Ingredient): void {

let filledVolume = this.getFilledVolume();

if(ingredient.ozQuantity + filledVolume > this.maxVolume)

throw Error("the glass does not have enough volume");

this.base.addIngredient(ingredient);

}

}

Our final UML looks like the following.

We can now use our Cocktail class and Glass decorator like so:

// gin and vermouth are Ingredient instances created beforehand.

const ginMartini = new Cocktail("gin martini", [gin, vermouth]);

const glass = new GlassDecorator(ginMartini, 5);

glass.addIngredient(new Ingredient("ice", 0, 4))

ginMartini.getDescription();

ginMartini.getPrice();
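For a version that runs end to end, here is a self-contained sketch with condensed stand-ins for Ingredient and Cocktail. The gin and vermouth quantities (2 oz and 0.25 oz) and the "olive brine" ingredient are assumptions made for the example, since the snippet above does not define them.

```typescript
// Condensed stand-ins for the article's classes; only what the glass check needs.
class Ingredient {
  name: string;
  ozPrice: number;
  ozQuantity: number;

  constructor(name: string, ozPrice: number, ozQuantity: number) {
    this.name = name;
    this.ozPrice = ozPrice;
    this.ozQuantity = ozQuantity;
  }
}

class Cocktail {
  name: string;
  ingredients: Ingredient[];

  constructor(name: string, ingredients: Ingredient[]) {
    this.name = name;
    this.ingredients = ingredients;
  }

  addIngredient(ingredient: Ingredient): void {
    const existing = this.ingredients.find(i => i.name === ingredient.name);
    if (existing) existing.ozQuantity += ingredient.ozQuantity;
    else this.ingredients.push(ingredient);
  }
}

class GlassDecorator extends Cocktail {
  base: Cocktail;
  maxVolume: number;

  constructor(base: Cocktail, maxVolume: number) {
    super(base.name, base.ingredients); // shares the base's ingredient array
    this.base = base;
    this.maxVolume = maxVolume;
  }

  getFilledVolume(): number {
    return this.base.ingredients.reduce((sum, i) => sum + i.ozQuantity, 0);
  }

  // New behaviour: refuse anything that would overflow the glass.
  addIngredient(ingredient: Ingredient): void {
    if (ingredient.ozQuantity + this.getFilledVolume() > this.maxVolume)
      throw new Error("the glass does not have enough volume");
    this.base.addIngredient(ingredient);
  }
}

// Assumed quantities: 2 oz gin and 0.25 oz vermouth in a 5 oz glass.
const gin = new Ingredient("gin", 5, 2);
const vermouth = new Ingredient("vermouth", 1, 0.25);
const ginMartini = new Cocktail("gin martini", [gin, vermouth]);
const glass = new GlassDecorator(ginMartini, 5);

glass.addIngredient(new Ingredient("olive brine", 0, 0.5)); // fits: 2.75 of 5 oz

let rejected = false;
try {
  glass.addIngredient(new Ingredient("ice", 0, 4)); // 2.75 + 4 exceeds 5
} catch (error) {
  rejected = error instanceof Error;
}

console.log(glass.getFilledVolume()); // 2.75
console.log(rejected); // true

// The undecorated cocktail still behaves exactly as before: no capacity check.
ginMartini.addIngredient(new Ingredient("ice", 0, 4));
console.log(glass.getFilledVolume()); // 6.75
```

The last two lines show the trade-off: the legacy Cocktail class is untouched, so the capacity rule only applies to callers that go through the decorator.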

In conclusion, the decorator pattern is useful when you want to add functionality to legacy code while mitigating any chance of impacting existing code. The change in behaviour applies from the outside in, meaning that any function that is overridden or appended is executed on the outer ring of decorators first.

A quick note on UML class diagrams: I have been showing code before the class diagram. This is not how my, or anyone's, workflow should go. Software engineers use UML diagrams to plan out their code and structure it optimally before writing it.